How Is Chatbot “Dr Tin” Trained?

LEE Hon-ping

October 2021

In February 2020, the Hong Kong Observatory (HKO) launched the chatbot service "Dr Tin", which uses artificial intelligence (AI) to automatically answer a range of weather- and astronomy-related questions covering current weather, weather warnings, weather forecasts, tidal information, Hong Kong standard time, weather forecasts for major world cities, and sunrise and sunset times. The chatbot has been well received, handling a monthly average of about 120,000 dialogues and holding a rating of 4 or above out of 5 since its launch. So what is the working principle behind the chatbot? How does Dr Tin understand and respond to questions?

First of all, Dr Tin classifies each question by intent. To make this possible, HKO prepares a batch of sample questions, each labelled with its intent. A computer program then generates a learning model by applying supervised learning to these sample questions.

For example, HKO prepares a batch of questions related to temperature, such as "What is the temperature in Causeway Bay?", "What is the temperature now?" and "Is it hot", and labels them with the "temperature" intent. Similarly, HKO prepares questions related to its other services and labels them in advance to create a training data set.
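A labelled training data set of this kind can be pictured as a list of question and intent pairs. The sketch below is only illustrative: the three temperature questions come from the text above, while the sunrise and sunset questions, the intent name `sunrise_sunset` and the helper `intent_counts` are assumptions made for the example, not HKO's actual data or code.

```python
from collections import Counter

# Labelled samples as (question, intent) pairs.
# The "temperature" rows are quoted from the article; the other rows and
# the intent name "sunrise_sunset" are hypothetical.
TRAINING_DATA = [
    ("What is the temperature in Causeway Bay?", "temperature"),
    ("What is the temperature now?", "temperature"),
    ("Is it hot", "temperature"),
    ("When is sunrise tomorrow?", "sunrise_sunset"),
    ("What time does the sun set today?", "sunrise_sunset"),
]


def intent_counts(samples):
    """Count how many sample questions carry each intent label."""
    return Counter(intent for _question, intent in samples)


counts = intent_counts(TRAINING_DATA)
for intent, n in counts.items():
    print(f"{intent}: {n} sample question(s)")
```

Checking the number of samples per intent is a common sanity check before training, since an intent with very few examples is harder for a supervised model to learn.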

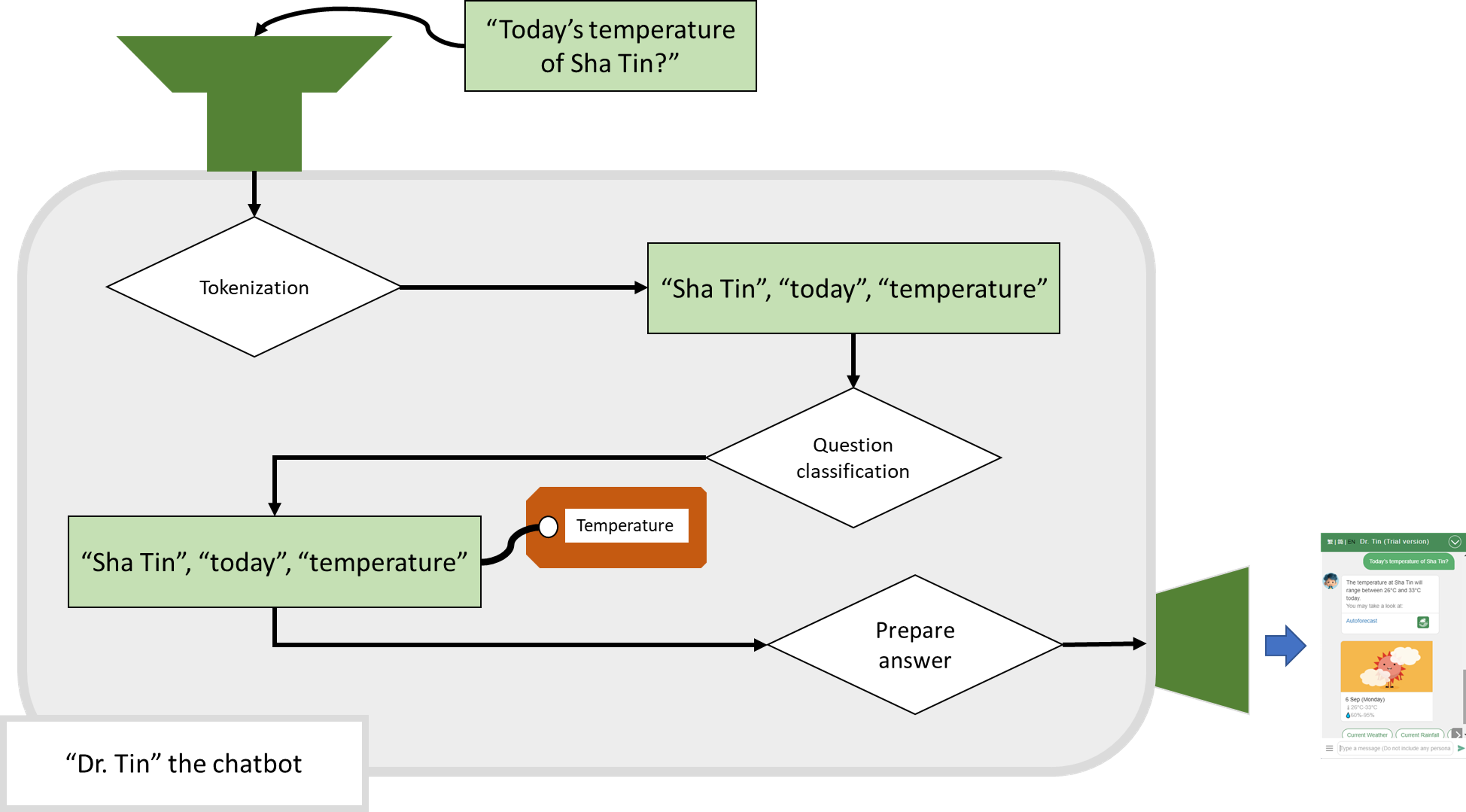

To make training more efficient, each question is tokenized using the tokenizer of a Natural Language Understanding (NLU) engine.

For example, the question "What is temperature of Hong Kong today?" can be tokenized in a number of ways:

| Option | Tokens | Number of tokens |
| --- | --- | --- |
| (i) | "What", "is", "temperature", "of", "Hong", "Kong", "today" | 7 tokens |
| (ii) | "What is temperature", "of Hong", "Kong today" | 3 tokens |
| (iii) | "What is temperature of Hong", "Kong today" | 2 tokens |
| (iv) | "What", "is temperature of Hong Kong today" | 2 tokens |
| (v) | "What is", "temperature of", "Hong Kong", "today" | 4 tokens |

We adopt tokenization (v), as every token in it is meaningful. The technical details of tokenization depend on the NLU engine chosen. The result is a set of tokenized questions and their respective intents, i.e. a well-prepared training data set. An AI program can then use this data set to develop a learning model for classifying questions.
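To illustrate how a tokenizer might arrive at tokenization (v), here is a minimal sketch that greedily matches known multi-word phrases and falls back to single words. The phrase list `KNOWN_PHRASES` and the function `tokenize` are assumptions for this example; a real NLU engine uses its own vocabulary and rules, and the article does not say which engine or method Dr Tin uses.

```python
import re

# Hypothetical list of multi-word phrases that should stay together.
KNOWN_PHRASES = {("what", "is"), ("temperature", "of"), ("hong", "kong")}
MAX_PHRASE_LEN = max(len(phrase) for phrase in KNOWN_PHRASES)


def tokenize(question):
    """Split a question into tokens, keeping known phrases in one piece."""
    words = re.findall(r"[a-z']+", question.lower())
    tokens = []
    i = 0
    while i < len(words):
        # Try the longest known phrase starting at position i first.
        for n in range(MAX_PHRASE_LEN, 1, -1):
            if tuple(words[i:i + n]) in KNOWN_PHRASES:
                tokens.append(" ".join(words[i:i + n]))
                i += n
                break
        else:
            # No phrase matched, so the single word becomes a token.
            tokens.append(words[i])
            i += 1
    return tokens


tokens = tokenize("What is temperature of Hong Kong today?")
print(tokens)  # ['what is', 'temperature of', 'hong kong', 'today']
```

Note that this sketch lowercases the text, so the four tokens match option (v) apart from capitalisation.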

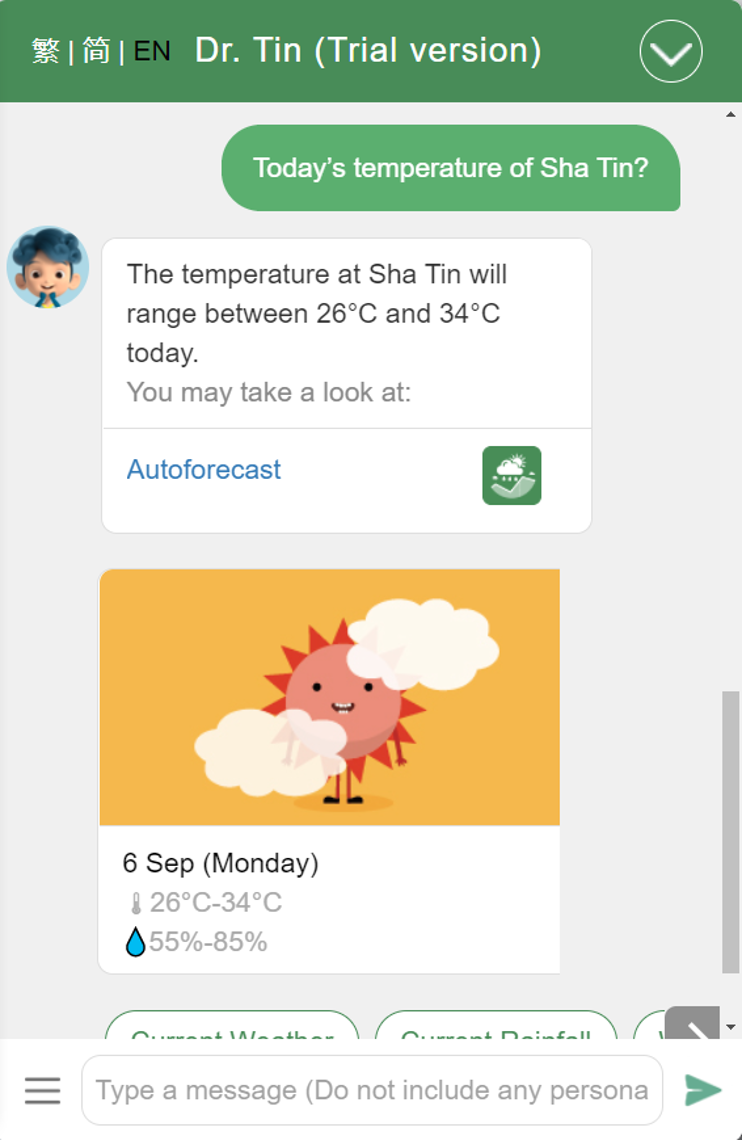

When a question comes in, Dr Tin uses the learning model to identify the intents that the enquiry most probably belongs to, and assigns the question to the intent with the highest score. For example, if someone asks "Today’s temperature of Sha Tin?", Dr Tin first extracts the tokens of the question, then uses the learning model to compute a score for the question against each intent. The intent with the highest score is taken as the intent of the question:

"Today’s temperature of Sha Tin?" --> "Sha Tin", "today", "temperature"

| Intent | Score |
| --- | --- |
| (1) Temperature | 99.99… |
| (2) Irrelevant question | 0.00… |
| (3) Sunrise and sunset | 0.00… |
| (4) .... | |

Since the intent "temperature" has the highest score, Dr Tin will take "temperature" as the intent of the question.
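The train-then-score procedure can be sketched with a very small classifier. The article does not state which learning algorithm Dr Tin uses, so the example below swaps in multinomial naive Bayes with add-one smoothing purely as an assumption. The tokenized samples, the intent names and the functions `train` and `score` are all hypothetical, and the scores this toy model produces are far less decisive than the 99.99… shown above.

```python
import math
from collections import Counter, defaultdict

# Hypothetical tokenized training samples: (tokens, intent).
SAMPLES = [
    (["what is", "temperature", "now"], "temperature"),
    (["temperature", "causeway bay"], "temperature"),
    (["is it", "hot"], "temperature"),
    (["sunrise", "tomorrow"], "sunrise_sunset"),
    (["sunset", "time", "today"], "sunrise_sunset"),
    (["tell me", "a joke"], "irrelevant"),
]


def train(samples):
    """Collect per-intent token counts (the naive Bayes statistics)."""
    token_counts = defaultdict(Counter)
    intent_counts = Counter()
    vocab = set()
    for tokens, intent in samples:
        intent_counts[intent] += 1
        token_counts[intent].update(tokens)
        vocab.update(tokens)
    return token_counts, intent_counts, vocab


def score(tokens, model):
    """Return {intent: score in percent}; the scores sum to 100."""
    token_counts, intent_counts, vocab = model
    total = sum(intent_counts.values())
    log_scores = {}
    for intent, n in intent_counts.items():
        log_p = math.log(n / total)  # prior probability of the intent
        denom = sum(token_counts[intent].values()) + len(vocab)
        for tok in tokens:
            if tok in vocab:  # tokens never seen in training are ignored
                log_p += math.log((token_counts[intent][tok] + 1) / denom)
        log_scores[intent] = log_p
    # Normalise the log scores into percentages.
    top = max(log_scores.values())
    weights = {k: math.exp(v - top) for k, v in log_scores.items()}
    z = sum(weights.values())
    return {k: 100 * w / z for k, w in weights.items()}


model = train(SAMPLES)
scores = score(["sha tin", "today", "temperature"], model)
best_intent = max(scores, key=scores.get)
print(best_intent)  # temperature
```

With only six training samples the winning margin is small; a production model trained on a large labelled data set would be expected to separate the intents much more sharply.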

Finally, the chatbot extracts entities from the question to prepare an answer. In the example above, "Sha Tin", "today" and "temperature" are the values of the entities "location", "time" and "temperature" respectively. As "today" is a time period, Dr Tin looks up today’s maximum and minimum temperatures for Sha Tin to prepare the answer for the user.
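Entity extraction can be sketched as a dictionary lookup from token to entity type. The dictionaries `ENTITY_VALUES` and `TIME_PERIODS`, the functions `extract_entities` and `temperature_query`, and the treatment of any time value other than a period as a request for the current reading are all assumptions for illustration; the article only describes the "today" case.

```python
# Hypothetical entity dictionaries; the real entity lists are not given.
ENTITY_VALUES = {
    "location": {"sha tin", "causeway bay", "hong kong"},
    "time": {"today", "now", "tomorrow"},
    "temperature": {"temperature", "hot", "cold"},
}

# Hypothetical set of time values that denote a period rather than a moment.
TIME_PERIODS = {"today", "tomorrow"}


def extract_entities(tokens):
    """Map each recognised token to its entity type."""
    entities = {}
    for token in tokens:
        for entity, values in ENTITY_VALUES.items():
            if token.lower() in values:
                entities[entity] = token
    return entities


def temperature_query(entities):
    """Decide which temperature figures to look up for the answer."""
    if entities.get("time", "").lower() in TIME_PERIODS:
        # A time period calls for the maximum and minimum temperature.
        kind = "max_min"
    else:
        # Assumption: anything else is treated as a current reading.
        kind = "current"
    return {"location": entities.get("location"), "kind": kind}


entities = extract_entities(["Sha Tin", "today", "temperature"])
query = temperature_query(entities)
print(entities)
print(query)
```

For the example question, the lookup yields the location "Sha Tin" and a request for the day's maximum and minimum temperature, which matches the behaviour described above.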

Fig. 1: A sample dialogue with the "Dr Tin" chatbot

Fig. 2: Workflow of the chatbot